About me

Hi there, this is Shufan Jiang. My current research interests include Large Language Models, Multi-Agent Systems, and Scaling Environments for Agents.

I was previously a research intern at miHoYo.

Research and Projects

FURINA

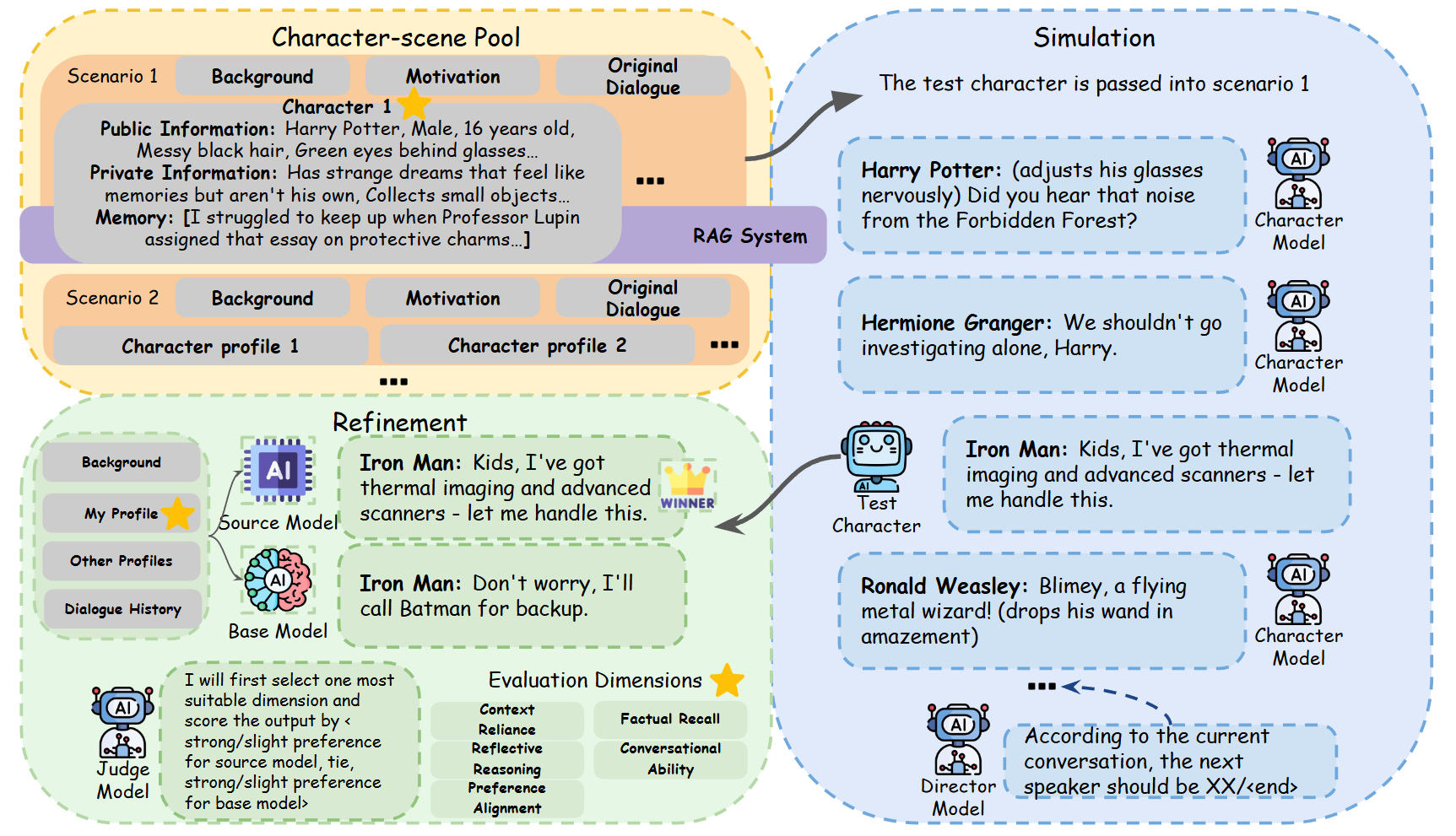

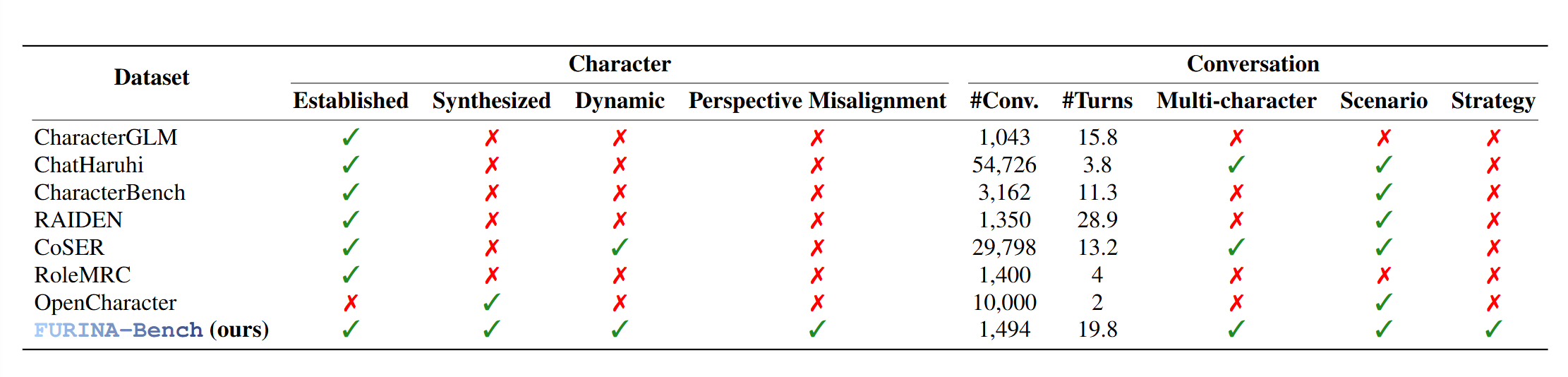

FURINA: A Fully Customizable Role-Playing Benchmark via Scalable Multi-Agent Collaboration Pipeline

Supervised by Prof. Chengwei Qin, The Hong Kong University of Science and Technology, China

- Co-first author; preprint: https://arxiv.org/pdf/2510.06800.

- Proposed FURINA-Builder, the first multi-agent collaboration pipeline for automatically constructing fully customizable role-playing (RP) benchmarks at arbitrary scales, targeting real-world challenges.

- Introduced FURINA-Bench, a comprehensive RP benchmark built with FURINA-Builder, which incorporates both established and synthesized test characters in group-chat scenarios, accompanied by fine-grained evaluation criteria. A preliminary analysis demonstrates that it facilitates clearer model separability and supports more robust evaluation.

HAMLET

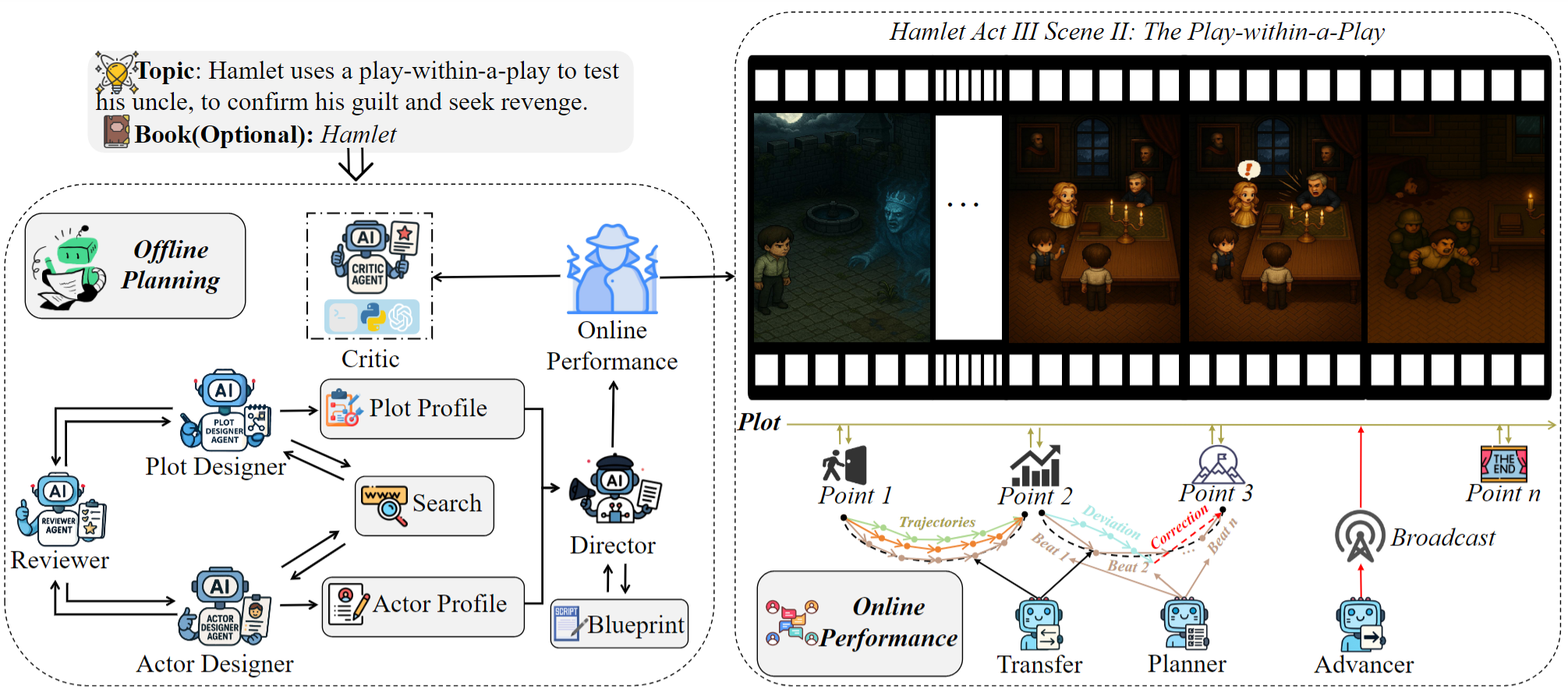

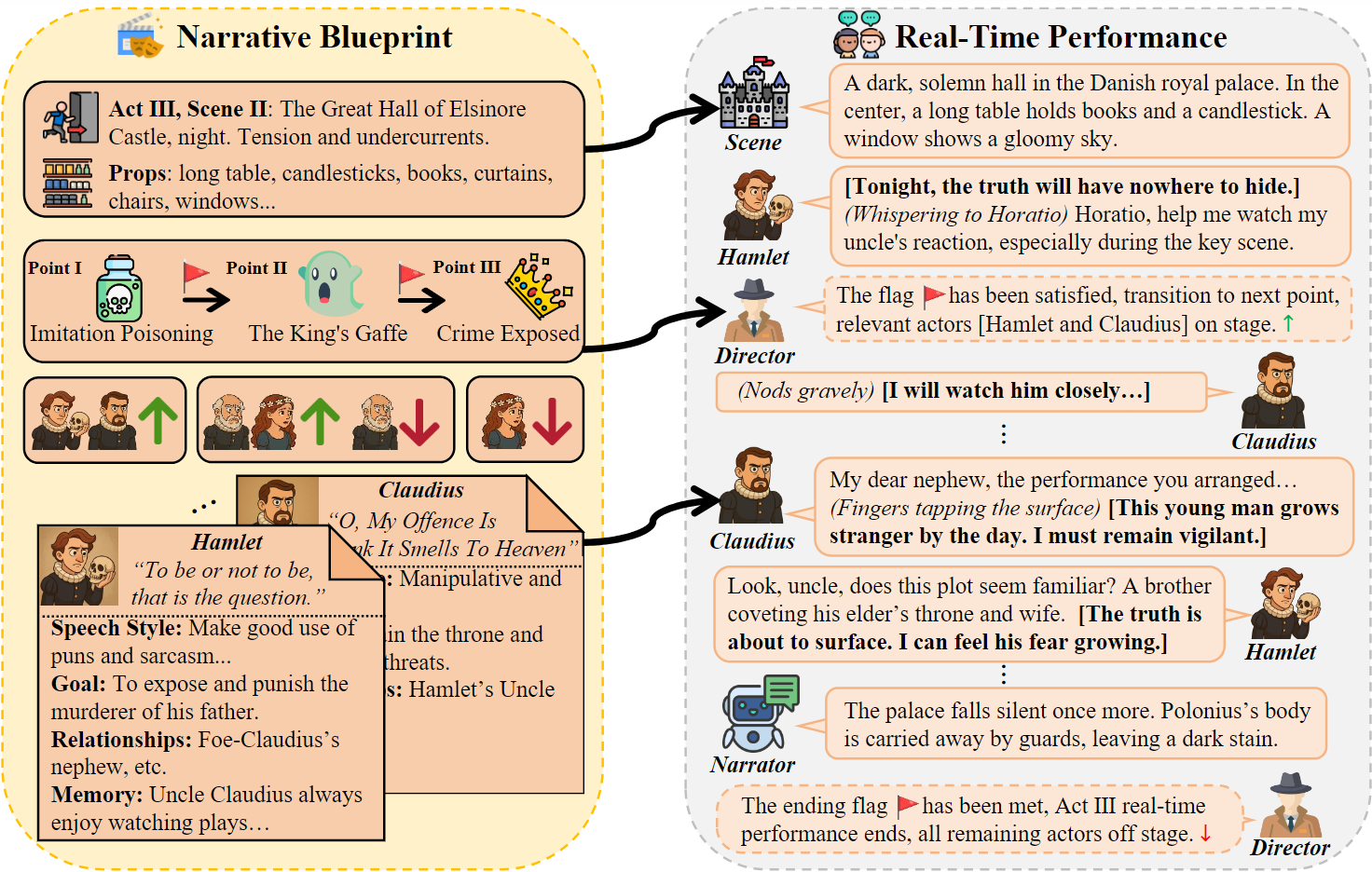

**HAMLET: Hyperadaptive Agent‑based Modeling for Live Embodied Theatrics**

Supervised by Prof. Xuelong Li, Institute of Artificial Intelligence (TeleAI), China Telecom, China

- Co‑first author; preprint: arXiv:2507.15518.

- Proposed HAMLET, a multi‑agent system that generates complete online drama performances from a single user instruction.

- Conducted model training, win‑rate evaluation, and ablation studies.

This is our demonstration of the core multi-agent system design for AI-driven drama.

LINKs

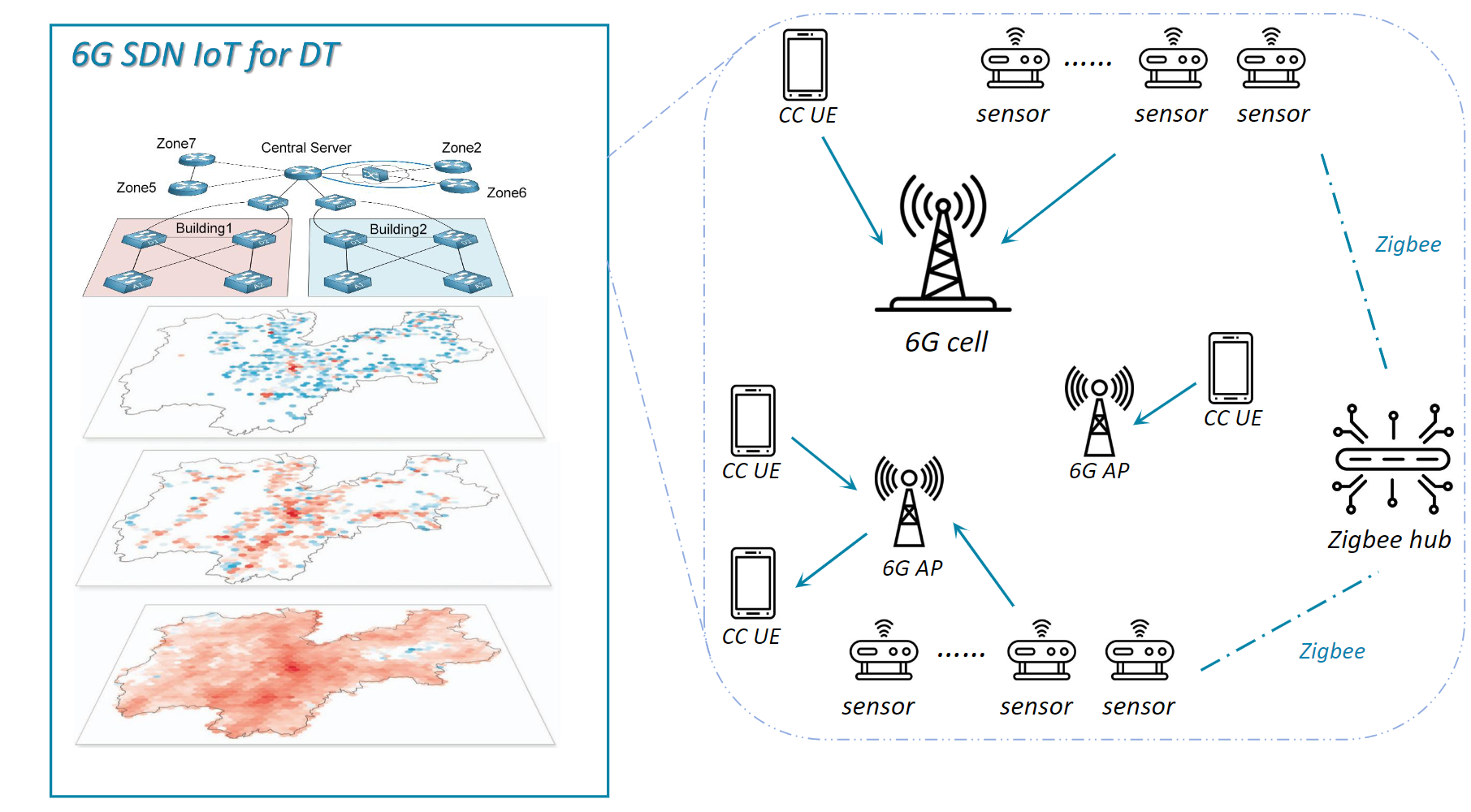

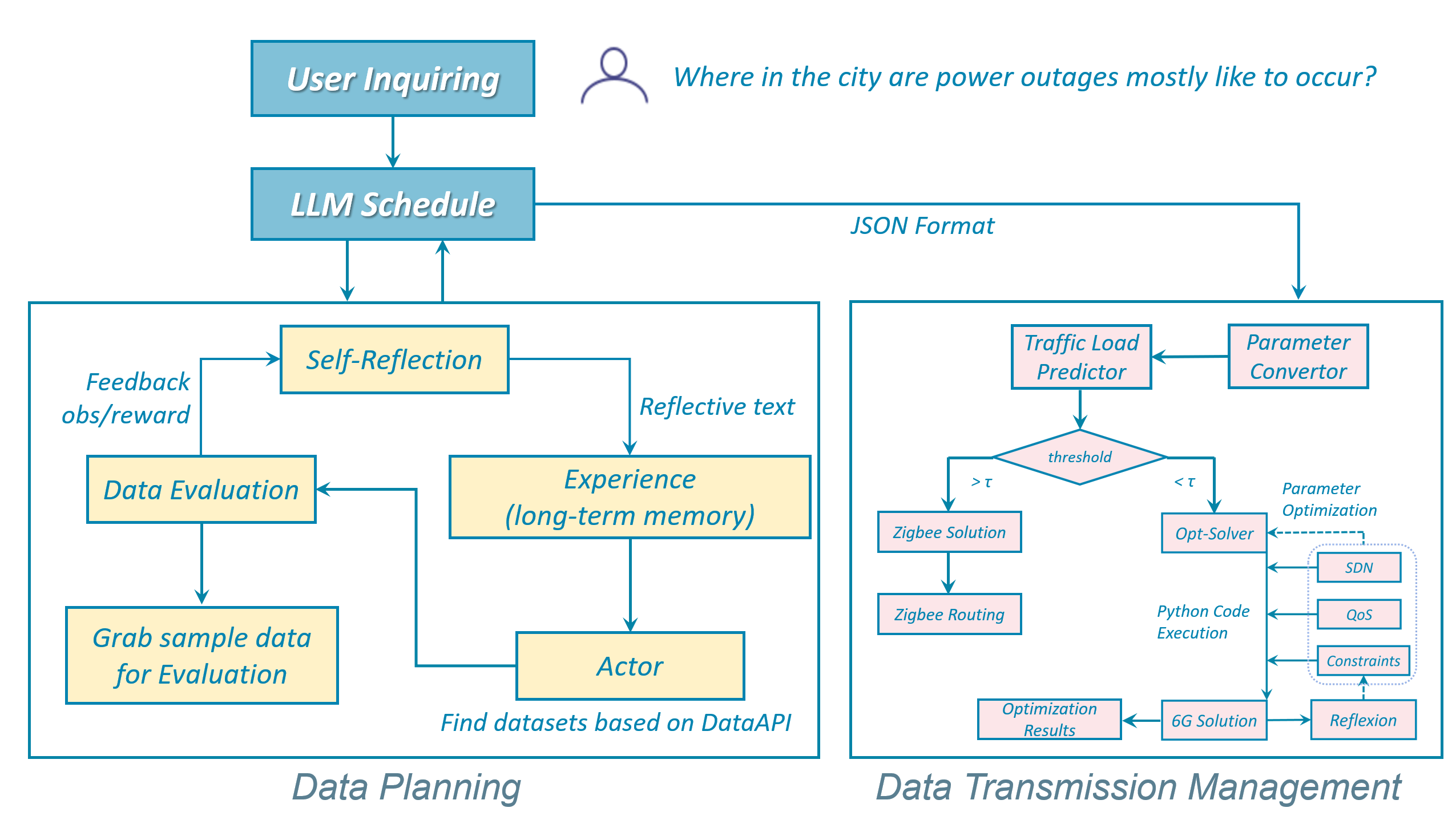

LINKs: LLM Integrated Management for 6G Empowered Digital Twin Networks

Supervised by Dr. Yue Wu, School of Information Science and Engineering, East China University of Science and Technology, China

- First author; accepted at IEEE VTC2024‑Fall; preprint: arXiv:2412.19811.

- Built an autonomous network management framework featuring multi‑agent collaboration, self‑reflection, and a comprehensive tool‑use stack.

- Fine‑tuned TimesFM with LoRA, achieving SOTA performance on network traffic prediction.

Island Life From Zero

Game Development

Supervised by Dr. Ke Fang, Tsinghua Shenzhen International Graduate School, Tsinghua University, China

- Designed an island‑themed social‑simulation game in Unity; published on itch.io.

- Implemented LLM‑driven NPCs with planning, reasoning, memory, and tool‑use.

- Enabled NPC skill evolution through interaction and collaboration.

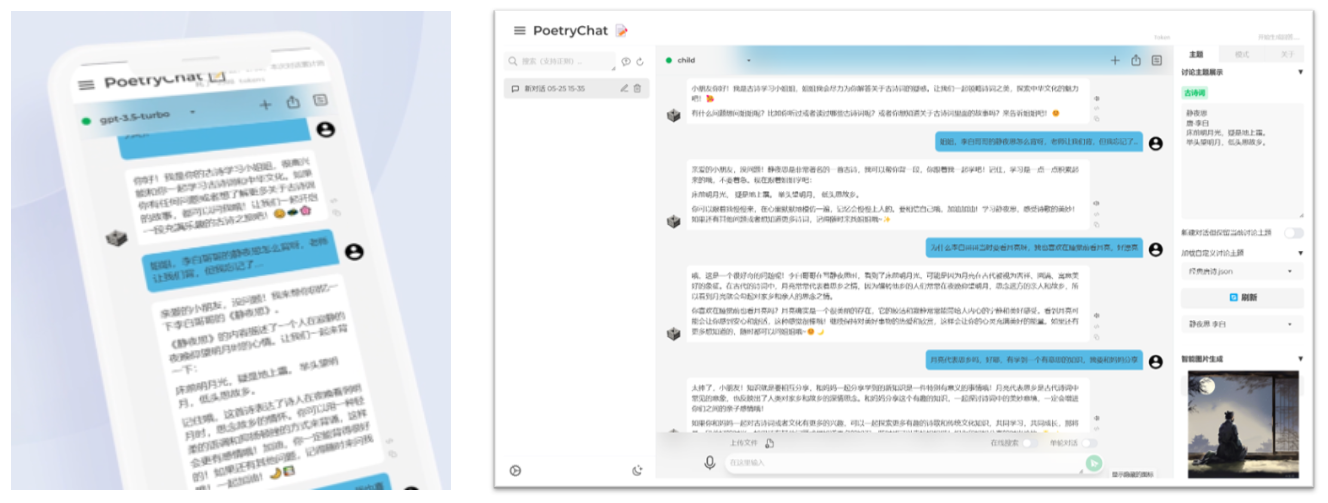

PoetryChat

LLM‑based Interactive Chinese Poetry Learning Assistant

- Designed structured prompts for text‑to‑image generation and dialogue across age groups; integrated with LangChain.

- Implemented multimodal RAG by extracting document images and re‑captioning with BLIP2‑OPT‑6.7B for cross‑modal alignment; built vector stores for retrieval.

- Developed a React web app with history tracking, theme switching, web search, dark mode, and RAG file upload.

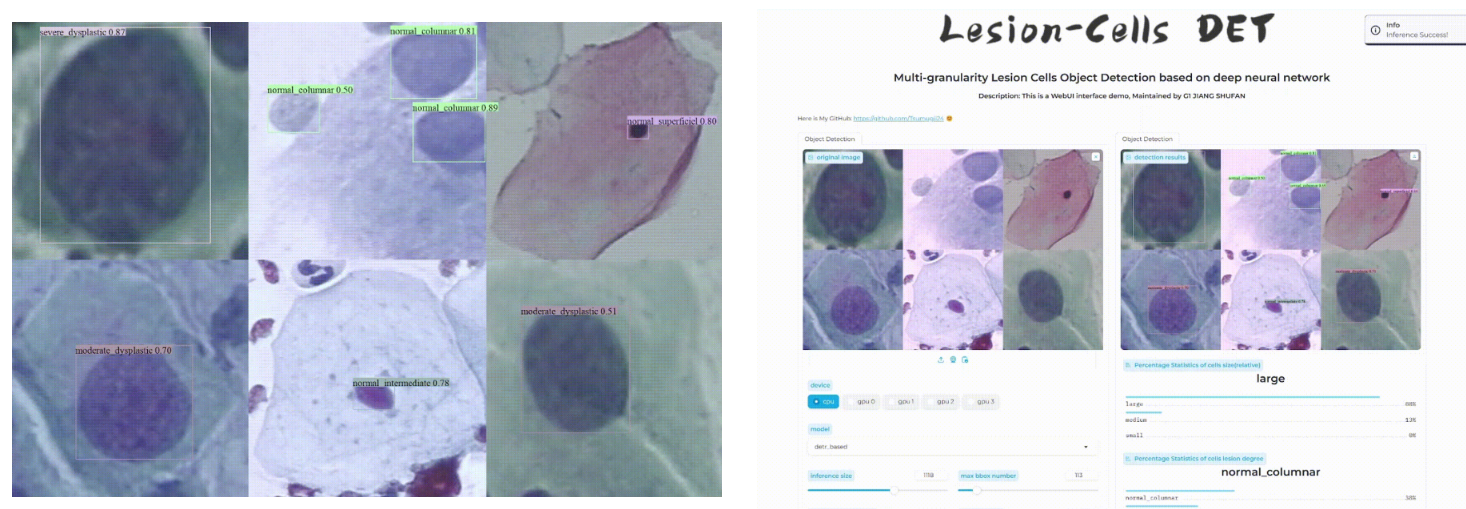

MGLCD

Multi‑Granularity Lesion Cell Detection Using Deep Neural Networks

Supervised by Prof. Pietro Liò, Department of Computer Science and Technology, University of Cambridge, UK

- Curated and annotated 2,000+ medical cell images across 7 categories from Kaggle, Hugging Face, and other sources.

- Preprocessed images with OpenCV (denoising, deduplication) and applied Mosaic augmentation to increase diversity.

- Trained CNN and Transformer‑based detectors (YOLO series, ViT, DETR).

Publications

* denotes equal contribution

2025

[ICLR 2026] Haotian Wu*, Shufan Jiang*, Mingyu Chen, Yiyang Feng, Hehai Lin, Heqing Zou, Yao Shu, Chengwei Qin. FURINA: A Fully Customizable Role-Playing Benchmark via Scalable Multi-Agent Collaboration Pipeline.

[ICLR 2026] Sizhou Chen*, Shufan Jiang*, Chi Zhang, Xiao-Lei Zhang, Xuelong Li. HAMLET: Hyperadaptive Agent‑based Modeling for Live Embodied Theatrics.

2024

[VTC 2024] Shufan Jiang, Bangyan Lin, Yue Wu, Yuan Gao. LINKs: LLM Integrated Management for 6G Empowered Digital Twin Networks.

Open Source Activities

Datawhale

- Delivered the talk "How to Learn AI" to over 200 attendees at the AI+X Tour, ECUST.

- Supported learners as a Teaching Assistant (guidance, Q&A, grading).

- Co‑developed a summer camp course on Multimodal Data Synthesis that drew 1,000+ participants.

- Top‑3 core contributor to self‑llm (23k+ GitHub stars).

- Co‑leader of unlock‑deepseek, expanding and reproducing DeepSeek innovations.

CAMEL

- Integrated BFCL (Berkeley Function Calling Leaderboard) features into camel.

- Co‑designed multi‑agent tutorials with runnable code in handy‑multi‑agent.

Others

- Contributed to transformers by fixing Triton version checks for MXFP4 inference support.

- Contributed to LLaMA‑Factory by resolving bitsandbytes quantization compatibility issues.

Work Experience

2025 miHoYo

LLM Algorithm Research Intern, Lumi Group, miHoYo Network Technology Co., Ltd.

2024 Roche

AI-Based Drug Design and App Development Intern, CICoR, Roche R&D Center (China) Ltd.

2023 Bilibili

Development Intern (LLM Direction), Corporate Efficiency Department, Shanghai Bilibili Technology Co., Ltd.

2023 Meituan

Software Engineer Intern, Intelligent and Communication Technology Center, Hanhai Information Technology Co., Ltd.